The GPU for Generative AI and HPC

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities. As the first GPU with HBM3e, the H200's larger and faster memory fuels the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for HPC workloads.

NVIDIA Supercharges Hopper, the World's Leading AI Computing Platform

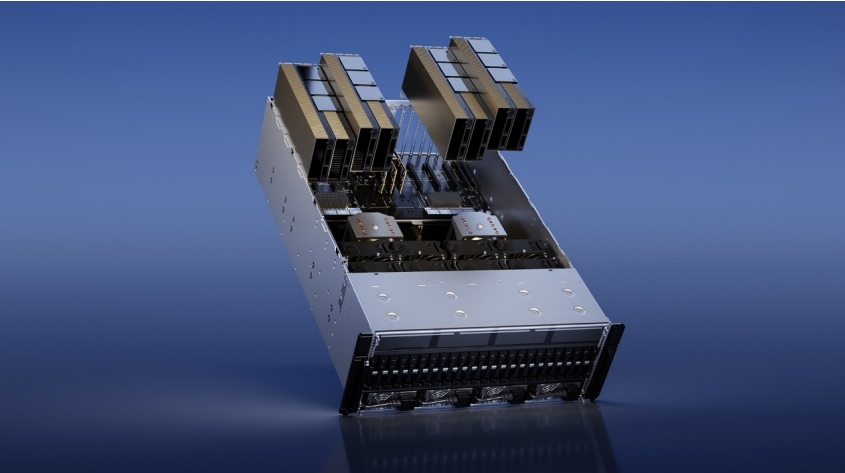

The NVIDIA HGX H200 features the NVIDIA H200 Tensor Core GPU with advanced memory to handle massive amounts of data for generative AI and high-performance computing workloads.

Higher Performance With Larger, Faster Memory

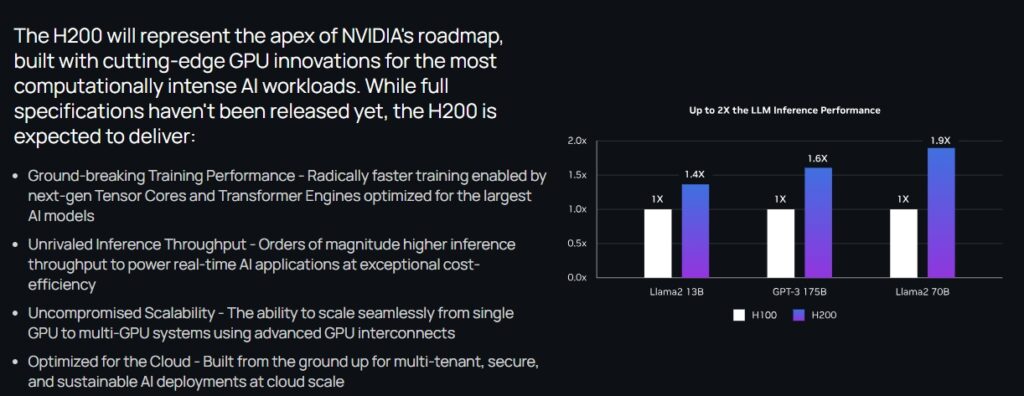

Based on the NVIDIA Hopper architecture, the NVIDIA H200 is the first GPU to offer 141 gigabytes (GB) of HBM3e memory at 4.8 terabytes per second (TB/s), nearly double the capacity of the NVIDIA H100 Tensor Core GPU, with 1.4X more memory bandwidth. The H200's larger and faster memory accelerates generative AI and LLMs, while advancing scientific computing for HPC workloads with better energy efficiency and lower total cost of ownership.
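The "nearly double" and "1.4X" comparisons can be checked with simple arithmetic. The sketch below is illustrative only: the H200 figures come from the paragraph above, while the H100 baseline (80 GB and 3.35 TB/s, the commonly cited H100 SXM figures) is an assumption that this page does not state.

```python
# H200 figures are taken from the text above.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8

# ASSUMPTION: H100 SXM baseline figures, not stated on this page.
H100_MEMORY_GB = 80
H100_BANDWIDTH_TBPS = 3.35


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)

print(f"Memory capacity:  {capacity_ratio:.2f}x")   # about 1.76x
print(f"Memory bandwidth: {bandwidth_ratio:.2f}x")  # about 1.43x
```

Under these assumed baseline figures, capacity comes out at roughly 1.76X and bandwidth at roughly 1.43X, which is consistent with the claims above.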

Unleashing AI Acceleration for Mainstream Enterprise Servers With H200 NVL

The NVIDIA H200 NVL is the ideal choice for customers with space constraints within the data center, delivering acceleration for every AI and HPC workload regardless of size. With a 1.5X memory increase and a 1.2X bandwidth increase over the previous generation, customers can fine-tune LLMs within a few hours and experience LLM inference 1.8X faster.