NVIDIA Tesla Series Graphics Cards

Showing all 15 results

-

Tesla A100 80GB PCIe NVIDIA GPU Graphics Card NVA100TCGPU80-KIT

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The Tesla A100 80GB PCIe NVIDIA GPU (NVA100TCGPU80-KIT) is a data center GPU accelerator designed for AI, machine learning, and high-performance computing (HPC) workloads. It combines 80GB of high-bandwidth HBM2e memory with a PCIe Gen4 host interface. Built on NVIDIA’s Ampere architecture, it supports Multi-Instance GPU (MIG) technology, which partitions the card into as many as seven isolated GPU instances for secure, efficient resource sharing. Its third-generation Tensor Cores deliver substantially higher training and inference throughput than the previous-generation V100 across a wide range of AI models. This combination of memory capacity and compute power makes it well suited to large-scale, data-intensive applications.

Brand New · Bulk Order Discounts Available

-

NVIDIA H100 NVL PCIe Tensor Core GPU

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA H100 NVL PCIe Tensor Core GPU is an AI accelerator designed for large language models and generative AI workloads. It is designed to be paired with other H100 NVL cards through NVLink bridges: each GPU carries 94 GB of HBM3 memory (188 GB across a two-card pair), and the NVLink connection provides 600 GB/s of GPU-to-GPU bandwidth. Built on the Hopper architecture, it is rated at up to 6x faster performance on transformer models than the previous generation. Key features include fourth-generation Tensor Cores, the Transformer Engine for mixed-precision computing, and secure Multi-Instance GPU (MIG) support. Aimed at data centers, it enables efficient, scalable AI training and inference with high performance per watt.

Brand New · Bulk Order Discounts Available

-

NVIDIA H200 Tensor Core GPU, 141 GB HBM3e, NVLink, PCIe 5.0, 700 W

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA H200 Tensor Core GPU is an accelerator for AI, HPC, and data analytics workloads. Built on the Hopper architecture, it features 141 GB of HBM3e memory with 4.8 TB/s of bandwidth, delivering high throughput for large-scale models and datasets. NVLink and PCIe 5.0 provide high-speed GPU-to-GPU and host connectivity for scaling across systems. With a power envelope of up to 700 W, the H200 provides the compute headroom needed for demanding generative AI and large language model applications. Its memory capacity and bandwidth make it a strong foundation for next-generation AI infrastructure.

Brand New · Bulk Order Discounts Available

-

NVIDIA A30 24GB HBM2 Memory Ampere GPU Tesla Data Center Accelerator

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA A30 is a high-performance data center GPU built on the Ampere architecture, featuring 24GB of HBM2 memory for exceptional bandwidth and efficiency. Designed for AI inference, training, and high-performance computing (HPC) workloads, it delivers a balance of performance and versatility. Key features include third-generation Tensor Cores for accelerated AI processing, multi-instance GPU (MIG) support for secure workload isolation, and PCIe Gen4 for faster data throughput. Its energy-efficient design and scalability make it ideal for enterprise deployments, offering a cost-effective solution for modern data center demands.

Brand New · Bulk Order Discounts Available

-

NVIDIA H100 80GB PCIe Tensor Core GPU

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA H100 80GB PCIe Tensor Core GPU is an accelerator designed for AI, HPC, and data analytics workloads. Built on the Hopper architecture, it features 80GB of high-bandwidth HBM2e memory and a PCIe Gen5 interface for faster host data transfer. Key features include the Transformer Engine for optimized AI model training and inference, fourth-generation Tensor Cores rated at up to 6x the performance of the previous generation on transformer models, and secure Multi-Instance GPU (MIG) capabilities for workload isolation. Its compute power, scalability, and energy efficiency make it well suited to demanding enterprise and research applications.

Brand New · Bulk Order Discounts Available

-

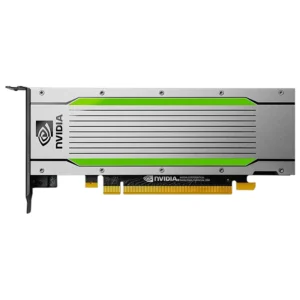

NVIDIA Tesla T4 16GB GDDR6 PCIe 3.0 Passive Cooling (900-2G183-0000-001)

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA Tesla T4 (900-2G183-0000-001) is a high-performance GPU accelerator designed for AI inference, machine learning, and data center workloads. Featuring 16GB of GDDR6 memory and PCIe 3.0 interface, it delivers exceptional energy efficiency and performance with a low-profile, single-slot form factor. Built on NVIDIA’s Turing architecture, it supports Tensor Cores for accelerated AI workloads and mixed-precision computing. Passive cooling enables deployment in dense server environments. Key benefits include reduced power consumption (70W TDP), scalability, and optimized performance for real-time inference, making it ideal for modern AI-driven applications.

Brand New · Bulk Order Discounts Available

-

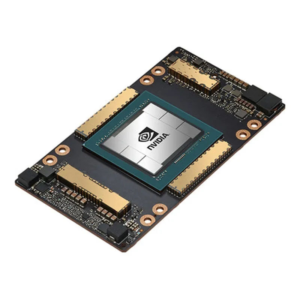

NVIDIA A100 80GB SXM4

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA A100 80GB SXM4 is a data center GPU that accelerates AI, machine learning, and high-performance computing (HPC) workloads. Featuring 80GB of high-bandwidth HBM2e memory and third-generation Tensor Cores, it supports large-scale model training, real-time inference, and advanced analytics. Built on the NVIDIA Ampere architecture, it supports Multi-Instance GPU (MIG) technology for better resource utilization and scalability. The SXM4 module form factor allows a higher power envelope than the PCIe version and links GPUs over third-generation NVLink at up to 600 GB/s, making it suited to multi-GPU servers running demanding enterprise and research applications.

Brand New · Bulk Order Discounts Available

-

NVIDIA A30 Tensor Core GPU

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA A30 Tensor Core GPU is a versatile data center accelerator designed for AI inference, training, and high-performance computing (HPC). Built on the Ampere architecture, it features 24 GB of HBM2 memory, third-generation Tensor Cores, and multi-instance GPU (MIG) support, enabling secure, efficient workload partitioning. The A30 delivers exceptional performance per watt, making it ideal for mainstream enterprise servers. Its blend of AI and HPC capabilities, energy efficiency, and scalability positions it as a cost-effective solution for modern data centers handling diverse and demanding workloads.

Brand New · Bulk Order Discounts Available

-

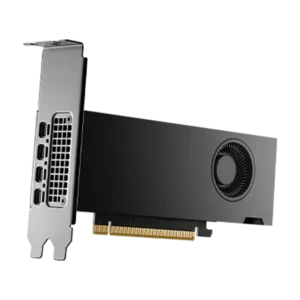

NVIDIA A2 Tensor Core GPU (Tesla A2)

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA A2 Tensor Core GPU (Tesla A2) is a compact, energy-efficient accelerator optimized for entry-level AI inference at the edge and in data centers. Built on the NVIDIA Ampere architecture, it delivers up to 36 TOPS of INT8 performance (72 TOPS with structural sparsity) at a configurable 40–60W, making it ideal for space- and power-constrained environments. Key features include 16GB of GDDR6 memory, PCIe Gen4 support, and a low-profile, single-slot design. Its small form factor and passive cooling allow easy deployment in a wide range of systems. The A2 excels at AI workloads such as image classification, object detection, and speech recognition, offering a cost-effective path to scalable AI deployment.

Brand New · Bulk Order Discounts Available

-

NVIDIA Tesla A30 Tensor Core GPU

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA Tesla A30 Tensor Core GPU is a high-performance data center GPU designed for AI inference, training, and high-performance computing (HPC) workloads. Built on the NVIDIA Ampere architecture, it features 24 GB of ECC HBM2 memory, third-generation Tensor Cores for accelerated AI performance, and multi-instance GPU (MIG) support for secure, isolated multi-tenant environments. The A30 delivers exceptional energy efficiency and scalability, making it ideal for mainstream enterprise servers. Its balance of performance, memory, and power efficiency makes it a cost-effective solution for AI, data analytics, and scientific computing.

Brand New · Bulk Order Discounts Available

-

NVIDIA A16 PCIe GPU (Tesla A16)

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA A16 PCIe GPU (Tesla A16) is a high-performance graphics card designed for virtual desktop infrastructure (VDI) and multi-user workloads. Featuring four independent GPUs on a single board and 64 GB of total GDDR6 memory (16 GB per GPU), it delivers exceptional user density and performance. Built on the NVIDIA Ampere architecture, it supports hardware-accelerated AV1 decode, second-generation RT Cores, and third-generation Tensor Cores for enhanced graphics and AI capabilities. The A16 is optimized for NVIDIA Virtual PC (vPC) and Virtual Apps (vApps), enabling smooth, secure, and scalable remote work experiences. Its low power consumption and high user density make it ideal for data centers seeking efficient, cost-effective VDI solutions.

Brand New · Bulk Order Discounts Available

-

NVIDIA Tesla A10 Tensor Core GPU (Tesla A10)

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA Tesla A10 Tensor Core GPU is a data center GPU designed for AI inference, graphics rendering, and virtual desktop workloads. Built on the NVIDIA Ampere architecture, it features 24 GB of GDDR6 memory, 72 RT Cores, and 288 third-generation Tensor Cores, delivering up to 31.2 TFLOPS of FP32 performance. Key benefits include accelerated AI and machine learning inference, real-time ray tracing, and support for NVIDIA virtual GPU (vGPU) software to share the GPU across virtual machines. Its main selling points are the ability to run AI and graphics workloads on a single GPU, high energy efficiency, and enterprise-grade reliability, making it well suited to modern data centers and virtualized environments.

Brand New · Bulk Order Discounts Available

-

NVIDIA Tesla V100S 32GB PCIe

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA Tesla V100S 32GB PCIe is a GPU accelerator designed for data centers, AI, and high-performance computing (HPC) workloads. Featuring 32GB of HBM2 memory and powered by NVIDIA’s Volta architecture, it delivers up to 8.2 TFLOPS of double-precision, 16.4 TFLOPS of single-precision, and 130 Tensor TFLOPS for AI training and inference. Its PCIe interface ensures broad server compatibility, while its large memory capacity and bandwidth (up to 1134 GB/s) enable efficient processing of massive datasets. The V100S combines strong performance, scalability, and energy efficiency, making it well suited to demanding AI, deep learning, and scientific computing applications.

Brand New · Bulk Order Discounts Available

-

NVIDIA Tesla P100 16 GB 4096-bit HBM2 PCIe x16 Computational Accelerator

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA Tesla P100 16 GB is a computational accelerator designed for data centers and scientific computing. Featuring 16 GB of HBM2 memory on a 4096-bit interface, it delivers high memory bandwidth for data-intensive workloads. Built on NVIDIA’s Pascal architecture, this PCIe version offers up to 4.7 TFLOPS of double-precision performance (the 5.3 TFLOPS figure applies to the SXM2 NVLink version), making it well suited to AI, deep learning, and HPC applications. Its PCIe x16 connectivity ensures broad compatibility and straightforward integration. Its key advantages include massive parallel processing power, energy efficiency, and scalability, helping accelerate complex computations and reduce time to insight.

Brand New · Bulk Order Discounts Available

-

NVIDIA Tesla A100 Ampere 40 GB SXM4 Graphics Processor Accelerator – PCIe 4.0 x16 – Dual Slot

Price: Request a Quote · Minimum Order Quantity (MOQ): 1 Piece

The NVIDIA Tesla A100 Ampere 40 GB SXM4 is a GPU accelerator designed for data centers, AI, and high-performance computing workloads. Built on the Ampere architecture, it features 40 GB of high-bandwidth HBM2 memory and connects to the host over PCIe 4.0. Key features include third-generation Tensor Cores for accelerated AI training and inference, Multi-Instance GPU (MIG) technology for workload isolation, and high energy efficiency. As an SXM4 module, it mounts on an SXM4 server baseboard rather than in a standard PCIe slot, which enables third-generation NVLink connections to other GPUs and a higher power and cooling budget than a PCIe card. The A100 scales well across multiple GPUs, making it suited to demanding applications in AI, data analytics, and scientific computing.

Brand New · Bulk Order Discounts Available